How Much Does “User Interviews” Cost in 2026?

Here is a clean, practical breakdown, framed as total cost per completed interview rather than platform fees alone.

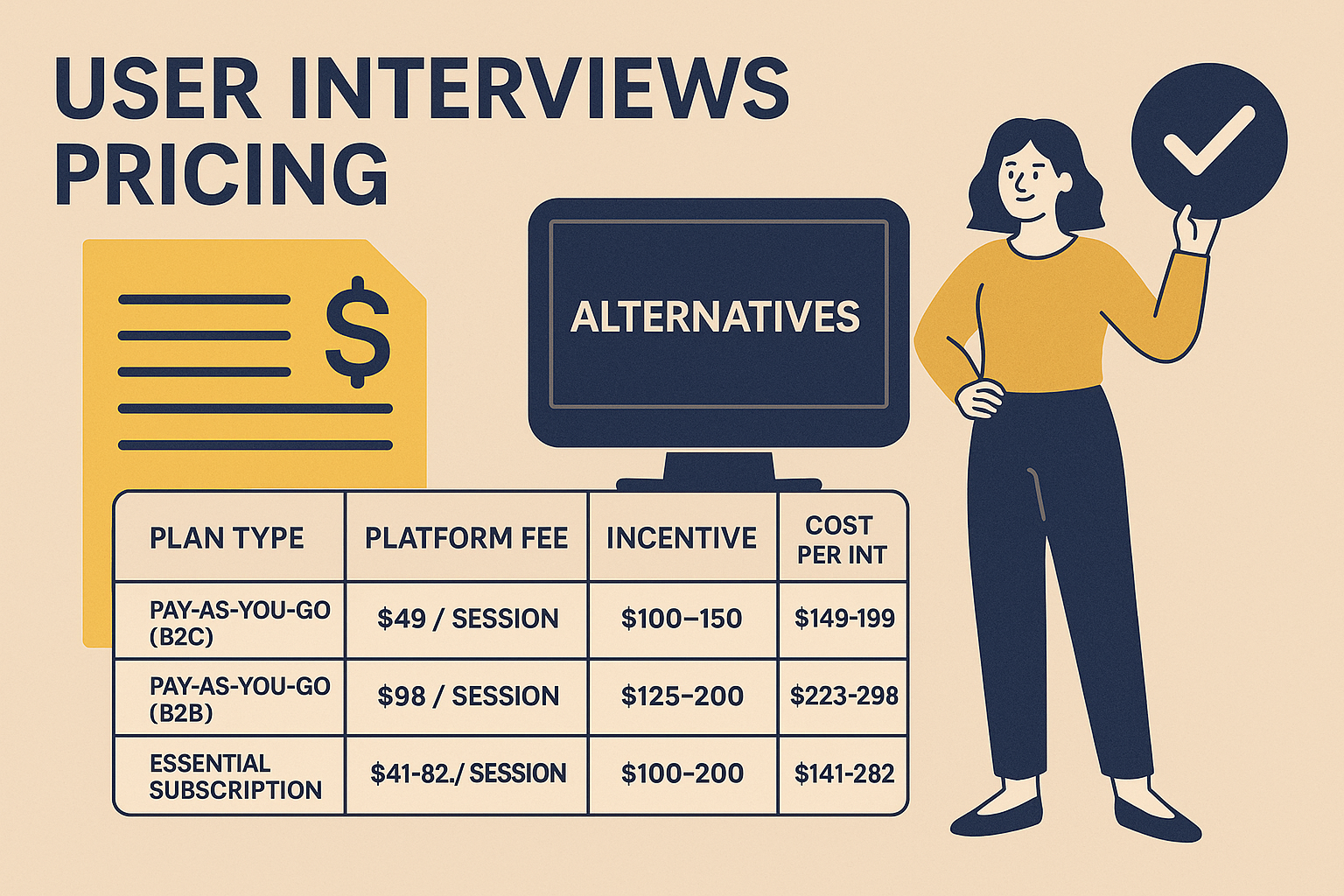

User Interviews Pricing in 2026 (Typical Ranges)

| Plan Type | Platform Fee | Incentive (Typical) | Est. Cost per Interview (Fee + Incentive) |
| --- | --- | --- | --- |
| Pay-As-You-Go (B2C) | $49 / session | $100–$150 | ~$149–$199 |
| Pay-As-You-Go (B2B) | $98 / session | $125–$200 | ~$223–$298 |
| Essential Subscription | $41–$82 / session | $100–$200 | ~$141–$282 |
| Enterprise | Custom (est. $30–$75 / session) | $100–$200 | ~$130–$275 |

These estimates assume you’re using User Interviews for panel access, screening, scheduling, and incentive handling.

The part people underestimate: platform fee + incentive is not your full cost. Coordination time, moderation time, transcription, and analysis can easily add $100+ per session (and much more if a senior researcher runs the sessions or you're doing rigorous coding).
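To make the difference between "fee + incentive" and fully loaded cost concrete, here is a minimal Python sketch of a per-interview cost model. The `InterviewCost` class, the `internal_hours` and `hourly_rate` parameters, and the 2-hour / $60-per-hour figures in the example are hypothetical placeholders, not User Interviews pricing; swap in your own team's numbers.

```python
from dataclasses import dataclass


@dataclass
class InterviewCost:
    """Per-interview cost model; every input is an assumption you supply."""
    platform_fee: float          # per-session platform fee (e.g. $49 B2C pay-as-you-go)
    incentive: float             # participant incentive
    internal_hours: float = 0.0  # hypothetical: coordination, moderation, transcription, analysis
    hourly_rate: float = 0.0     # hypothetical: loaded cost of one researcher hour

    def platform_plus_incentive(self) -> float:
        # What the pricing table estimates: fee + incentive only
        return self.platform_fee + self.incentive

    def fully_loaded(self) -> float:
        # Adds the internal time that is easy to forget
        return self.platform_plus_incentive() + self.internal_hours * self.hourly_rate


# B2C pay-as-you-go at the low end of the incentive range
b2c = InterviewCost(platform_fee=49, incentive=100)
print(b2c.platform_plus_incentive())  # 149

# Same session with 2 hypothetical hours of team time at a hypothetical $60/hour
b2c_loaded = InterviewCost(platform_fee=49, incentive=100, internal_hours=2, hourly_rate=60)
print(b2c_loaded.fully_loaded())  # 269
```

Even a modest internal-time estimate moves the cheapest row of the table from ~$149 to well over $200 per interview.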

Comparison Table: User Research Interview Platforms in 2026

| Platform | Interview Type | Pricing Structure | Strengths |
| --- | --- | --- | --- |
| User Interviews | Live moderated | $49–$98/session + incentive | Large panel, strong for niche B2B recruitment |
| UserCall | Async AI-moderated voice | $99–$299/month (flat rate) | No scheduling, scalable, transcripts + themes + summaries |
| Respondent | Live moderated | 50% of incentive (min $40) | Strong for professional/B2B recruiting |
| UserTesting | Moderated + unmoderated | $30k+/year (enterprise) | Usability tasks at scale, video recordings, enterprise workflow |
| PlaybookUX | Moderated + unmoderated | From ~$267/month | Good value, video interviews + scheduling, lightweight ops |
| Maze | Unmoderated | $1,500–$15,000/year | Fast prototype/flow testing with analytics |
| Lyssna | Unmoderated | From $89/month | Quick preference tests and directional feedback |

User Interviews Alternatives in 2026

1) UserCall (Modern Async + AI-Powered)

If your team is tired of scheduling loops, no-shows, and manual analysis, UserCall flips the model:

- Run AI-moderated voice interviews async

- Upload a guide, collect responses within hours (not weeks)

- Built-in transcription + theming + summaries

- Scales across segments, time zones, and languages without adding ops load

Best for: product and UX teams needing fast turnaround, early validation, continuous discovery, churn/NPS follow-ups, and “always-on” qualitative insight.

2) Respondent

Strong for professional recruitment.

- Charges 50% of participant incentive (min $40)

- You still handle screening + scheduling

- Can get expensive at volume

Best for: high-value, hard-to-reach professionals for live interviews.
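Because Respondent's fee scales with the incentive, it helps to compute it directly. A minimal Python sketch, assuming "min $40" means the platform fee per session never drops below $40; the function names are hypothetical and the 50% rate and $40 floor are taken from the description above.

```python
def respondent_fee(incentive: float, rate: float = 0.5, minimum: float = 40.0) -> float:
    """Platform fee: 50% of the incentive, with an assumed $40 per-session floor."""
    return max(incentive * rate, minimum)


def respondent_total(incentive: float) -> float:
    # What you pay per session: the incentive itself plus the platform fee
    return incentive + respondent_fee(incentive)


for incentive in (60, 150, 300):
    print(incentive, respondent_fee(incentive), respondent_total(incentive))
# 60 40.0 100.0
# 150 75.0 225.0
# 300 150.0 450.0
```

The floor dominates for small incentives, while for senior professionals with large incentives the fee grows linearly, which is why it can get expensive at volume.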

3) UserTesting

Enterprise-grade usability and reaction testing.

- Great for usability tasks, prototype feedback, and large-scale unmoderated studies

- Expensive, typically annual contracts

Best for: enterprise UX orgs running constant usability testing.

4) PlaybookUX

Good “all-around” option with more approachable pricing.

- Moderated + unmoderated

- Supports screen sharing and scheduling workflows

- Often includes transcription/tagging basics

Best for: startups or agencies that want a single tool for interviews + usability.

5) Maze + Lyssna

Both are excellent for rapid, unmoderated UX feedback.

- Maze: product flows, task success, prototype testing

- Lyssna: preference testing, first-click tests, fast directional signals

Best for: designers/PMs who need speed and direction, not deep interviews.

Which One Should You Choose in 2026?

| Your Goal | Best Option | Why |
| --- | --- | --- |
| Live, in-depth interviews with niche users | User Interviews / Respondent | Best panel access for hard targets + classic moderation |
| Async, fast insights with near-zero ops | UserCall | No scheduling, no moderation, auto themes + summaries |
| Remote usability testing | UserTesting / Maze | Task flows, screen capture, scalable unmoderated UX |
| Budget-friendly mix of moderated + unmoderated | PlaybookUX | Good balance of cost and capability |
| Fast design preference checks | Lyssna | Quick and lightweight, great for direction |

Real-Life Research Example

Last quarter, I ran a concept test with two teams.

Team A (User Interviews):

- 20 interviews

- ~2 weeks of scheduling

- several no-shows

- hours of manual transcription cleanup + coding

Team B (UserCall):

- uploaded the script

- received voice responses within 48 hours

- themed summaries generated shortly after

AI doesn’t replace high-stakes, human-led depth interviews. But for early validation, continuous discovery, and speed-sensitive projects, async AI interviews can cut total cost dramatically and remove coordination bottlenecks.
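One way to sanity-check the cost argument is a break-even count: how many sessions per month before a flat monthly plan costs less than per-session platform fees. This is a minimal Python sketch that compares platform fees only; it assumes the $99–$299/month flat rate and the $49–$98/session fees are directly comparable and ignores incentives, plan session caps, and internal time. `break_even_sessions` is a hypothetical helper, not any vendor's API.

```python
import math


def break_even_sessions(monthly_flat_fee: float, per_session_fee: float) -> int:
    """Smallest monthly session count at which the flat fee is strictly below
    the summed per-session fees. Incentives and plan caps are ignored."""
    if per_session_fee <= 0:
        raise ValueError("per_session_fee must be positive")
    # The flat plan wins once n * per_session_fee > monthly_flat_fee
    return math.floor(monthly_flat_fee / per_session_fee) + 1


for flat in (99, 299):
    for per_session in (49, 98):
        print(flat, per_session, break_even_sessions(flat, per_session))
# 99 49 3
# 99 98 2
# 299 49 7
# 299 98 4
```

Under these assumptions the flat plan pays for itself within a handful of sessions a month; real comparisons should add incentives and internal time to both sides.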

Final Takeaways (2026)

- User Interviews is still great for classic, live interviews, especially niche B2B. But costs can quickly land in the $200–300/interview range once incentives and time are included.

- UserCall is a fast async alternative for teams that want depth without scheduling, plus built-in transcription and analysis.

- Respondent, UserTesting, PlaybookUX, Maze, and Lyssna each win in specific scenarios depending on control, speed, and budget.

- Most high-performing teams blend tools: live interviews for deep exploration, async AI interviews for scale and rapid iteration.